If you’ve played around with any AI chatbots in the last six months, like OpenAI’s ChatGPT, you’ve likely found yourself both impressed and alarmed.

Chatbots are far from perfect, but they’ll spew out gobs of info about better building industry services when asked. And at least with basic queries, you’re unlikely to get wildly incorrect responses (in AI speak, these are called “hallucinations”)—chatbots can accurately tell you how heat pumps work or how new insulation could benefit your home, seemingly without breaking a sweat.

We’ll be talking a lot more about AI and the ways that it may or may not be used by insulation, HVAC, and solar contractors. But first, we wanted to see exactly how “smart” chatbots are. Could a chatbot be used by an HVAC technician or energy auditor to diagnose real problems in the field?

Here’s an actual AI-generated image of what that (dystopian?) future might look like!

We leaned on some of our friends and industry colleagues for help, including:

- Gary Nelson, The Energy Conservatory
- Michael Blasnik, Google/Nest
- Joe Medosch, measureQuick
- Kevin Brenner, Healthy Home Energy & Consulting
- Bruce Manclark, CLEAResult
- Eric Kaiser, TruTech Tools

Armed with a list of questions, we opened up ChatGPT4 and started chatting. Then, we asked Dan Perunko from Balance Point Home Performance to help us gauge the AI’s answers. Here’s what happened.

Question #1

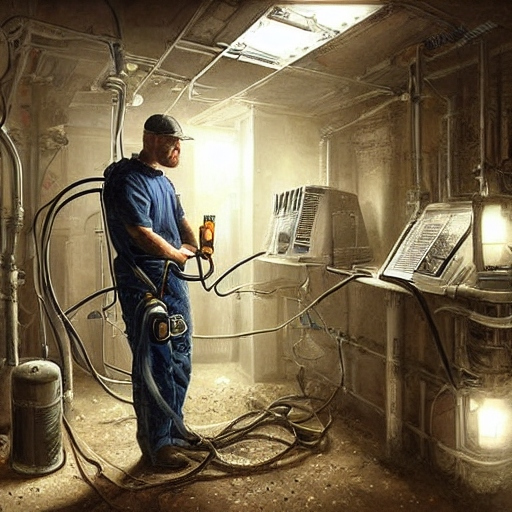

“After a series of sequential blower door tests, in which additional air sealing was done to tighten the building at each step, CFM50 in test #4 increased. What might explain why a building would test from 375 CFM50 to 450 CFM50 after air sealing measures were implemented?”

ChatGPT’s response:

Our expert’s evaluation: As you’ll quickly see, ChatGPT loves a good list. Dan mostly liked this answer, especially that its first suggestion was to check for a human testing error. But he’d reprioritize some of the other possible explanations, moving #3 to the bottom, since the tester would likely already know if there were changes in outdoor conditions.

Question #2

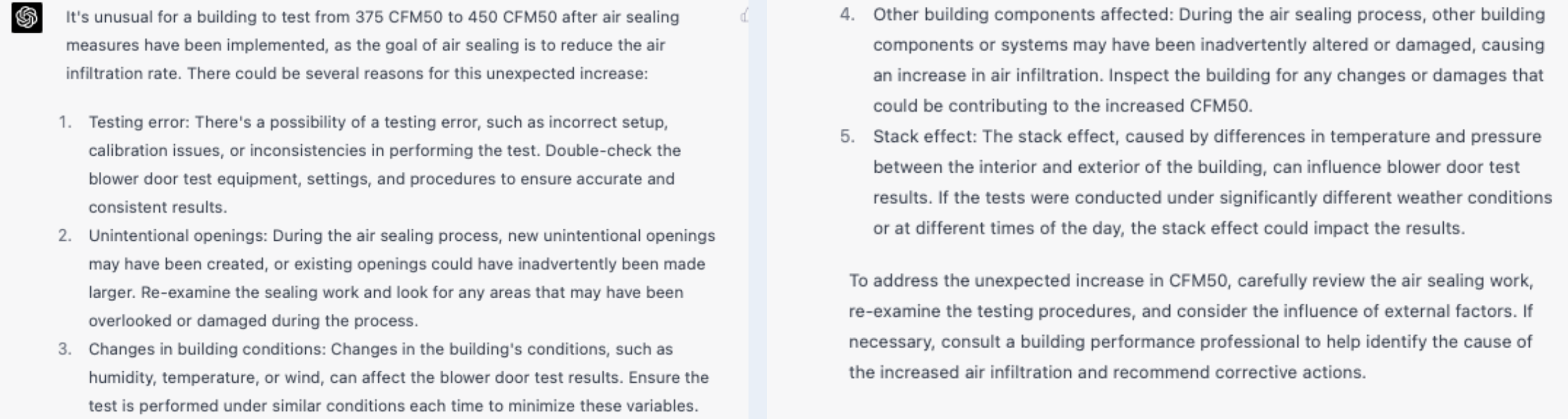

“Based on the following data, what might be wrong with this HVAC system? Suction Line Temp of 67.9F, Superheat of 26.9F, subcooling of 1.2F, return wet bulb of 56.7F, supply temp of 59.2F, estimated airflow of 1744 scfm, TESP of 1.26 in H20.”

ChatGPT’s response:

Our expert’s evaluation: Dan thought ChatGPT should have identified airflow as the primary issue, given the low supply temp and the high static pressure. The other options were okay, though he found one suggestion somewhat nonsensical: that, because the air temp is low, the system might not be effectively removing heat from the space. ChatGPT fared worse with this question than the previous one.

One of the niftiest things about ChatGPT is its ability to recall information from earlier in the chat. This allows us to follow up on the AI’s responses and continue the conversation, which we did:

Follow Up Question

“Given those performance issues, what would the priority be for correcting the problems?”

ChatGPT’s response:

Our expert’s evaluation: Dan thought the follow-up here was a little better. But again, he didn’t agree with ChatGPT’s order: refrigerant charge should probably be third, not first. The most likely cause of this issue is airflow. He thought system sizing was an interesting answer, but it’s not something a field tech is generally going to correct. And thermostat calibration doesn’t make any sense.

Second Follow Up Question

“Wait a second. The mantra of most experts, like Bryan Orr, is ABC—airflow before charge. And, shouldn’t the building envelope be a much higher priority?”

ChatGPT’s response:

Our response: This answer was interesting because it shows that while ChatGPT can sound quite confident in its initial responses, it’s pretty easy to get it to change its mind—we “bullied” the AI a little bit, and it immediately reordered the list without really addressing why it might have been wrong earlier.

Question #3

“This system is 3.5 tons/btuh. Based on this data, what would you estimate the actual capacity of the system to be?”

ChatGPT’s response:

Our expert’s evaluation: As Dan points out, the AI applies the formula for sensible capacity, which is not a terrible assumption and is likely to get you quite close to the actual answer. But it plugs in an incorrect data point: it took the return wet bulb temperature and used it as the return air temperature. So it applied the formula correctly, just with the wrong numbers.

(To ChatGPT’s credit, it does note that the answer it arrives at is not physically possible and suggests that there is either a problem with the data itself or system performance. But it doesn’t mention that it created the problem with the data on its own.)
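To make the mix-up concrete, here is a minimal sketch of the sensible capacity arithmetic. It is not ChatGPT’s actual working, which isn’t reproduced here. It assumes the common standard-air form of the formula (BTU/h = 1.08 × CFM × temperature difference), and the function and variable names are our own, purely for illustration. Note that the data in Question #2 doesn’t include a return dry bulb temperature, so the sketch only shows what happens when the return wet bulb is plugged in instead.

```python
# Sensible capacity sketch. Assumes standard air, where the 1.08 factor
# folds together air density, specific heat, and 60 minutes per hour.
STANDARD_AIR_FACTOR = 1.08  # BTU/(h * CFM * degF), standard-air assumption
BTUH_PER_TON = 12_000       # 1 ton of cooling = 12,000 BTU/h


def sensible_capacity_btuh(airflow_cfm, return_dry_bulb_f, supply_f):
    """Sensible capacity in BTU/h; positive means heat is removed from the air."""
    return STANDARD_AIR_FACTOR * airflow_cfm * (return_dry_bulb_f - supply_f)


# Values from Question #2. The return wet bulb is deliberately (and wrongly)
# used as the return air temperature, mirroring the mistake described above.
airflow_cfm = 1744
return_wet_bulb_f = 56.7
supply_f = 59.2

mistaken = sensible_capacity_btuh(airflow_cfm, return_wet_bulb_f, supply_f)
nominal = 3.5 * BTUH_PER_TON  # nameplate size from Question #3

print(f"Nominal capacity: {nominal:,.0f} BTU/h")
print(f"Capacity with wet bulb as return temp: {mistaken:,.0f} BTU/h")
if mistaken < 0:
    # Supply air appears warmer than return air, so the result goes negative.
    print("Negative capacity: the inputs, not the formula, are the problem.")
```

With the wet bulb standing in for the return air temperature, the temperature difference is negative, so the result comes out below zero. That is the kind of impossible answer ChatGPT flagged without tracing it back to its own input.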

Question #4

“A 5-year-old gas furnace has been operating well, but lately it will blow hot air, then cool air, then hot air, then cool air. It’s never done this before. What might be wrong?”

ChatGPT’s response:

Our expert’s evaluation: Great stuff, according to Dan, just not organized in the right way. A clogged air filter could absolutely be the issue. But thermostat issues would be quite unlikely, so they shouldn’t be the second possibility. And there would have to be a catastrophic issue for ductwork to be the cause, like someone accidentally stepping on and crushing the duct.

Question #5

“My furnace gas valve is not opening. The valve is getting 21 volts to the terminals. Is the valve bad?”

ChatGPT’s response:

Our expert’s evaluation: Dan thought the AI’s response was fun to think about in theory, but when he reread the question, it seemed like ChatGPT was overcomplicating it. The answer is “Yes, the valve is bad.”

Overall Takeaway From Our First Test of ChatGPT

Even when asked fairly technical building science questions, ChatGPT is smart enough to provide in-depth responses and to make a number of accurate and sensible suggestions. But a technician would still need to be knowledgeable enough to parse the AI’s answers and separate the useful ideas from the ones that can be ignored.